Virtual & extended reality production merges the physical and virtual worlds to create immersive environments for entertainment, education and events. But how is it done?

In our beginner’s guide, we take you through everything you need to know about virtual & xR production, step by step. Learn about the setup, systems and configurations required to produce immersive content.

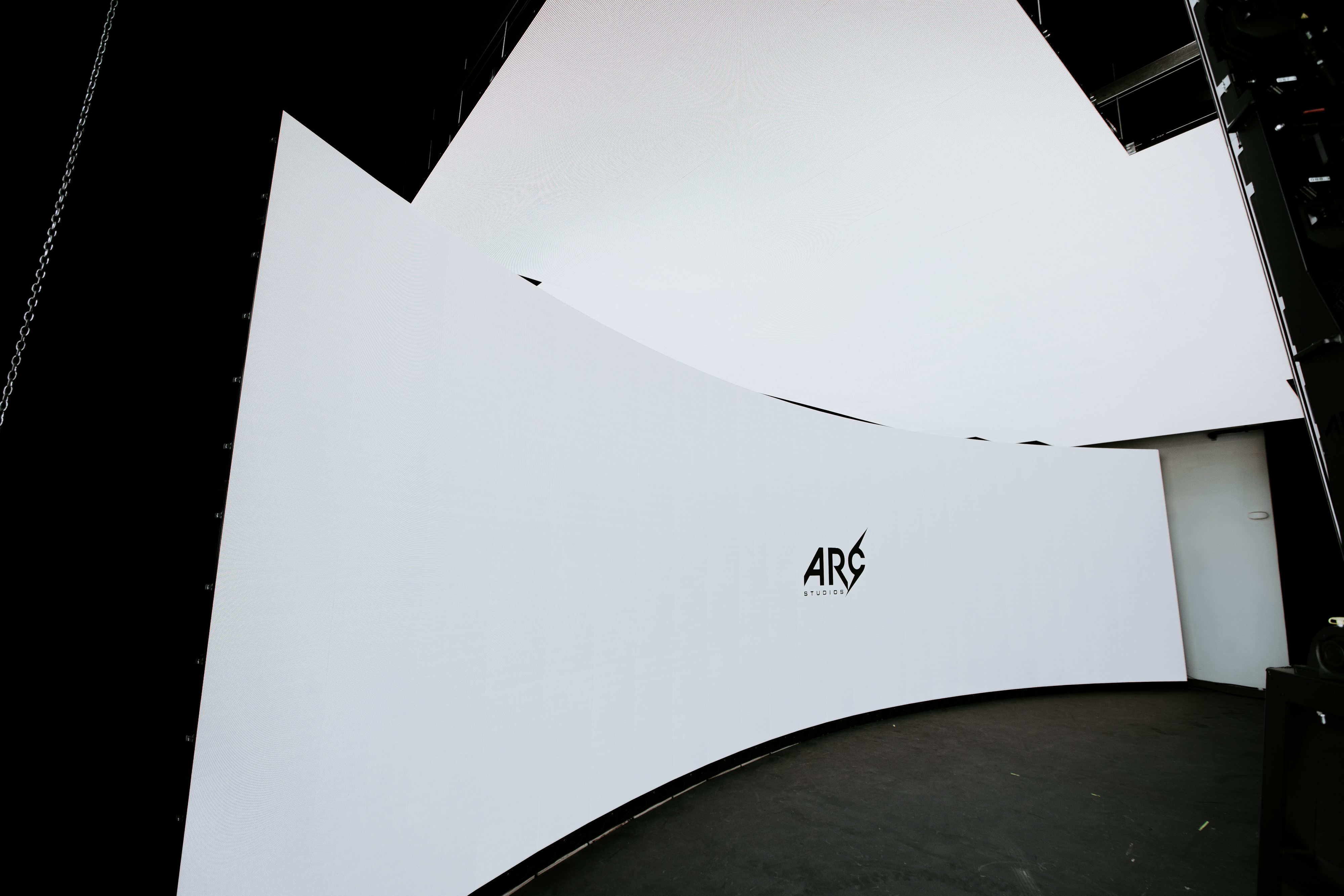

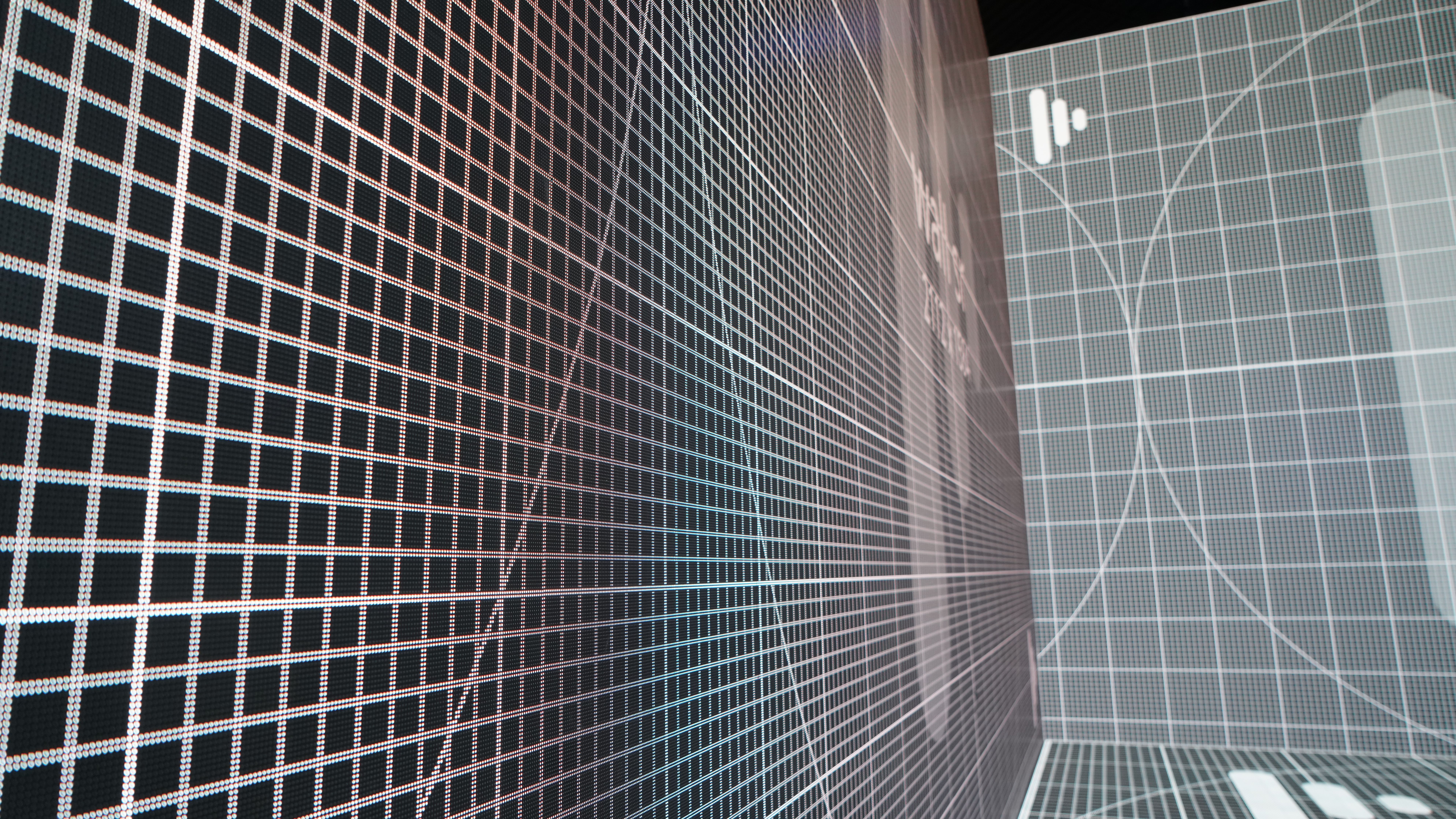

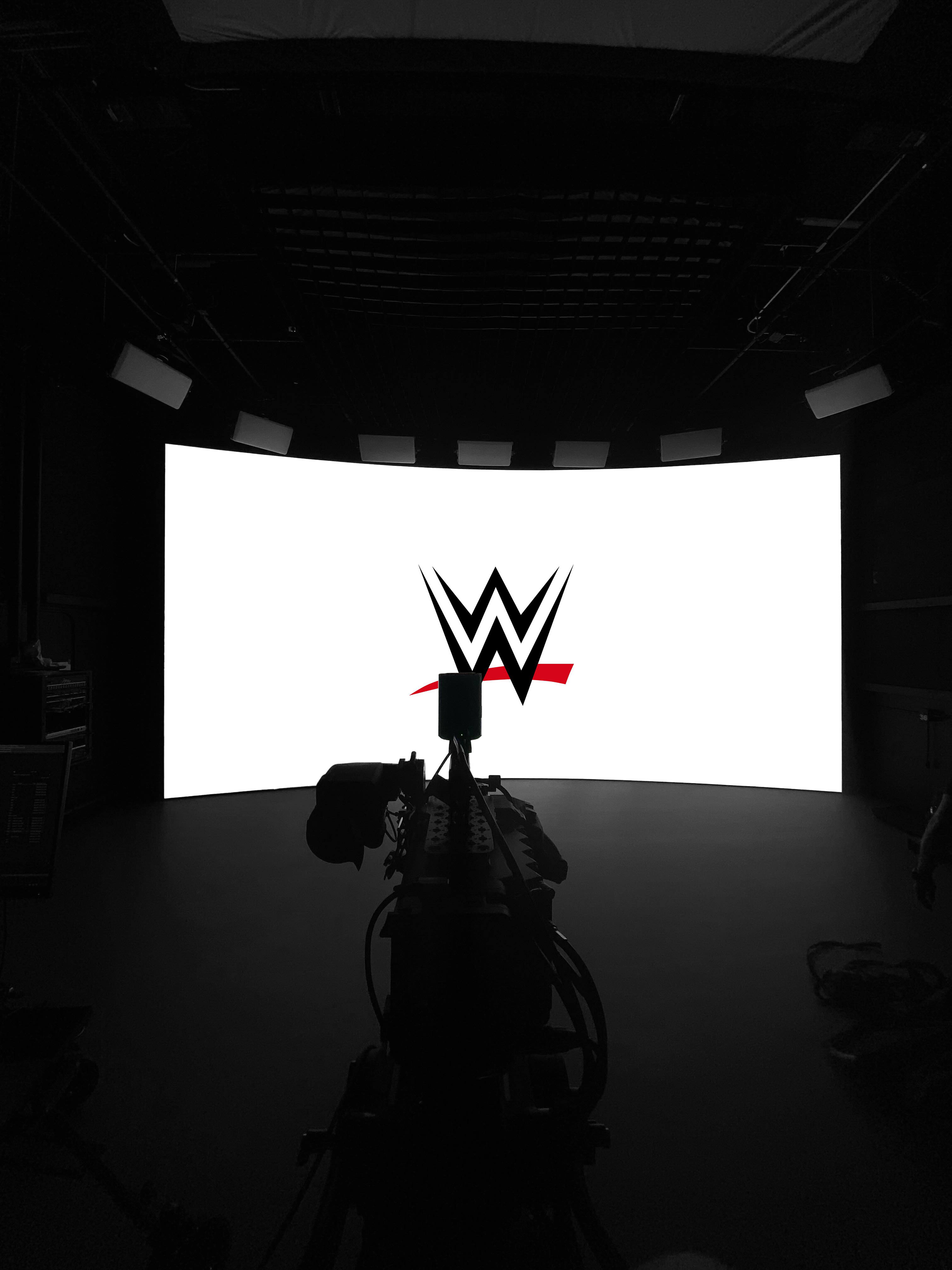

We start with the setup, and in particular with its prominent element: the LED Volume.

The LED Volume

The LED Volume, also called an LED stage, is where the magic of virtual production happens nowadays.

Think of it as the next-level green screen.